During the last year, countless tech leaders have talked about the danger that artificial intelligence could pose in the future. Like most people, I laughed at them. After all, did I really think that *The Terminator* or *The Matrix* was prophetic? Hardly. But the more I read and the more I thought about it myself, the more concerned I became. Now I wonder whether there’s any way to prevent that future at all.

Is it really reasonable to think AI could take over the world? Do we really think code will be so poorly written, and software testers so incompetent, that AI robots will be allowed to kill humanity? Unfortunately, I do. No one will intend it, of course, but poorly tested code will end up running on robots in the wild. Consider all the system updates that have been installed on your computer or cell phone, many of them to fix bugs. Think about all the app updates that ship every single day, and all the one-star reviews in the mobile app stores. AI will be no different.

Consider the many potential causes of AI failures: developer errors, inadequate testing, corporate release requirements, poorly defined ethics, unforeseen events, and so on. Any one of these could make AI behave in ways it was not intended to, with potentially catastrophic consequences. Now consider government AI developed by the lowest bidder. Wow, that’s scary.

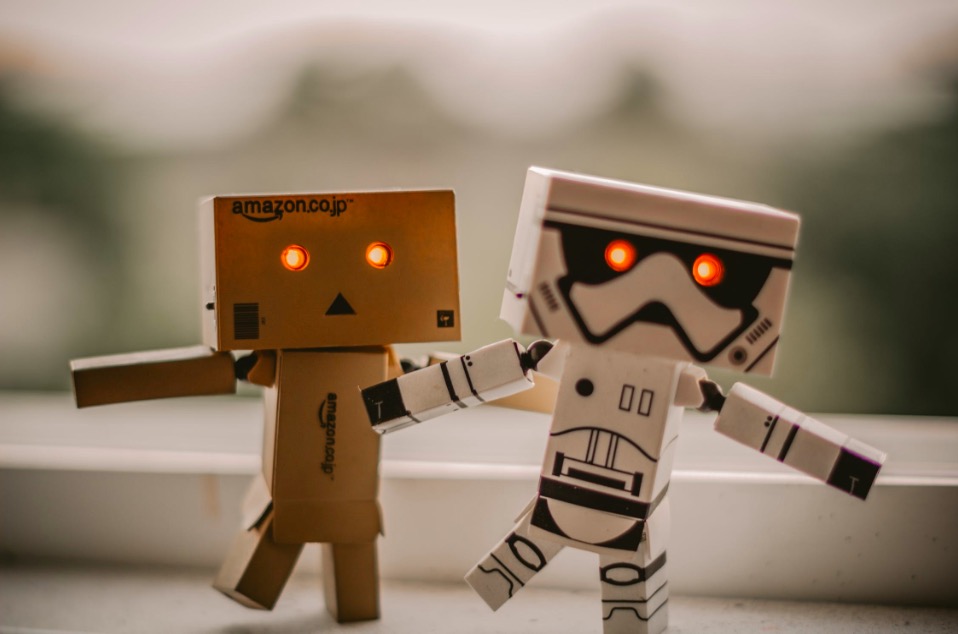

The more I think about that, the more certain I become that AI will eventually cause huge problems for the world. As such, it’s imperative that we, the tech community, consider the limits of AI: not its technological limits, but the limits that safety and security demand. Do we want AI police officers or soldiers? That sounds dangerous. Could Russian hackers embed “Order 66” in our own robot army? Do we trust robots with firearms to make the right decision in a life-or-death situation?

My intent is not to sound alarmist, but to start thinking about these issues now. If we don’t, we may find it’s too late to do so later.