For users of Mac or Linux-based machines, aliases and scripts can be some of the most valuable tools for increasing productivity. Even if you run a Windows machine, there is a strong chance that some of the machines you interact with, such as servers on AWS, run Linux.

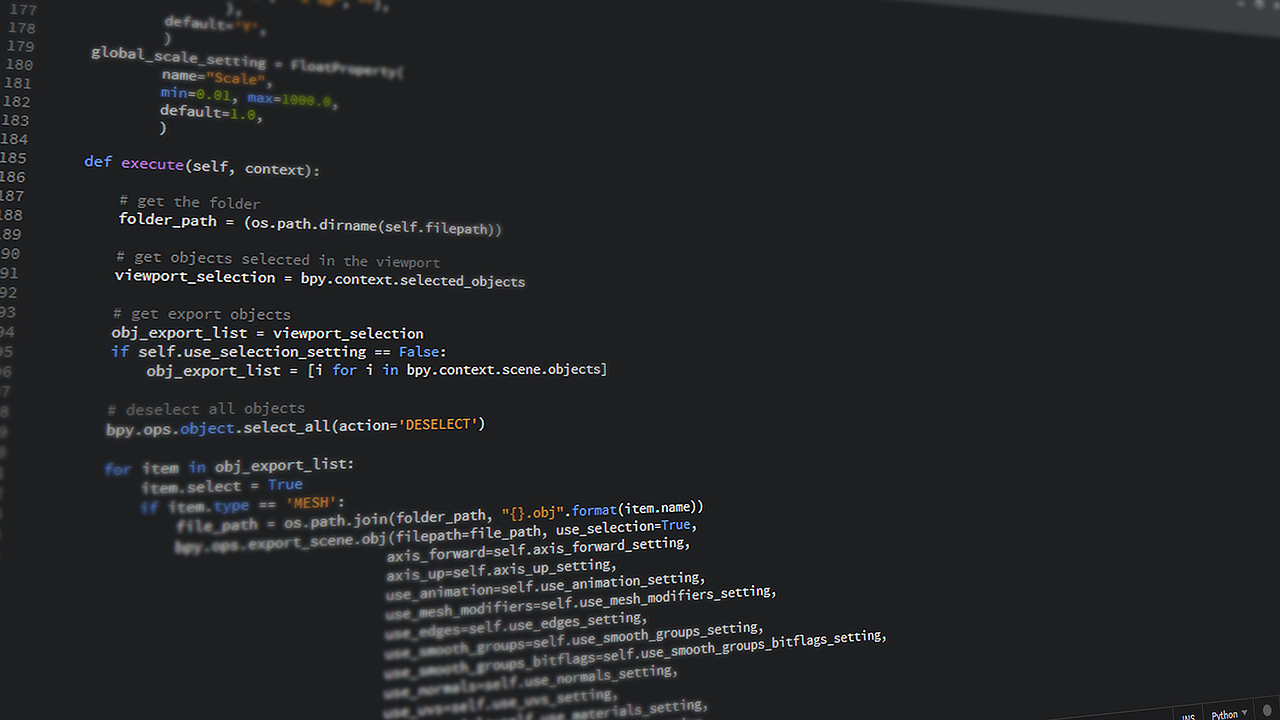

So, what are aliases and scripts? A script is a file containing the sequence of commands needed to perform a complex procedure. I often create scripts to deploy software applications to a development server or to run complex software builds. Aliases are much shorter: single-line commands that are typically defined in a shell startup file, such as the `.bash_profile` file on macOS.
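As a minimal sketch of what that looks like, an alias is just one line in the startup file. The `~/bin` folder below is only an assumed, common convention for keeping personal scripts; nothing in this setup requires it.

```bash
# Added to ~/.bash_profile (macOS) or ~/.bashrc (many Linux distributions)
alias hd='hexdump -C'

# Assumed convention: keep personal scripts in ~/bin and put that folder on the PATH
export PATH="$HOME/bin:$PATH"
```

After saving, run `source ~/.bash_profile` (or open a new terminal window) to load the changes. A script placed in that folder also needs `chmod +x` before it can be run by name.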

Below are some of the aliases I use. Since macOS doesn't have the `hd` command found on many Linux systems, I have aliased it to call `hexdump -C`. macOS also has no `rot13` command. rot13 is a very old Caesar cipher that rotates each letter 13 places through the alphabet, so I have aliased it to an equivalent `tr` command.
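To see the `tr` mapping behind the `rot13` alias at work, the sketch below wraps the same call in a function, because bash does not expand aliases in non-interactive scripts by default. The function name `rot13_filter` is hypothetical and chosen so it does not collide with the alias. `tr` maps each character in the first set to the character at the same position in the second set, so A becomes N, M becomes Z, and N wraps around to A.

```bash
#!/bin/bash
# rot13_filter: hypothetical name; same tr mapping as the rot13 alias.
rot13_filter() {
    # A-M map to N-Z and N-Z map to A-M (likewise for lowercase);
    # every other character passes through unchanged.
    tr 'A-Za-z' 'N-ZA-Mn-za-m'
}

echo 'Hello, World!' | rot13_filter                 # prints: Uryyb, Jbeyq!
echo 'Hello, World!' | rot13_filter | rot13_filter  # prints: Hello, World!
```

Because 13 is exactly half of 26, applying the cipher twice returns the original text, which is why one command both encodes and decodes.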

Since I spend a lot of time at the command prompt, I have created a variety of aliases to speed up directory navigation, including a series of `up` commands that move me up the directory hierarchy (particularly useful in a deep build structure) and a `root` command that takes me to the top-level folder of a git project.
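If maintaining six separate `up` aliases feels repetitive, a shell function can take the number of levels as an argument instead. This is a swapped-in alternative, not how the aliases here work, and the name `upn` is hypothetical, chosen so it does not collide with the `up` alias.

```bash
#!/bin/bash
# upn N: move up N directory levels (defaults to 1). Hypothetical name.
upn() {
    local levels=${1:-1} target="" i
    # Accept only positive whole numbers.
    if ! [[ $levels =~ ^[1-9][0-9]*$ ]]; then
        echo "usage: upn [levels]" >&2
        return 1
    fi
    # Build a relative path such as ../../../ with one ../ per level.
    for ((i = 0; i < levels; i++)); do
        target+="../"
    done
    cd -- "$target"
}
```

Placed in a startup file, `upn 3` then does the same thing as `up3`.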

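Finally, I have a `lastfile` command that shows me the most recently modified file in the current directory, which is usually the last file I created or downloaded. This is particularly useful with command substitution: for example, to view that file I can simply execute `` cat `lastfile` ``.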
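Because `ls -t` sorts every entry by modification time, `lastfile` can also return a folder if one changed most recently. A variation that skips folders is sketched below under the hypothetical name `newestfile`. It relies on `ls -p`, which appends a `/` to directory names in both the GNU and macOS versions of `ls`, so `grep -v /` can filter them out.

```bash
#!/bin/bash
# newestfile: print the most recently modified non-directory entry in the
# current folder. Hypothetical name, distinct from the lastfile alias.
newestfile() {
    # -t: newest first; -p: mark directories with a trailing "/"
    ls -tp | grep -v / | head -n 1
}
```

Quoting the substitution, as in `cat "$(newestfile)"`, keeps file names containing spaces intact.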

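```bash
# macOS has no hd command; hexdump -C prints the canonical hex + ASCII dump
alias hd='hexdump -C'

# Show disk usage in human-readable units (K, M, G)
alias df='df -h'

# Caesar cipher that rotates each letter 13 places
alias rot13="tr 'A-Za-z' 'N-ZA-Mn-za-m'"

# Move up one to six levels in the directory hierarchy
alias up='cd ..'
alias up2='cd ../..'
alias up3='cd ../../..'
alias up4='cd ../../../..'
alias up5='cd ../../../../..'
alias up6='cd ../../../../../..'

# Jump to the top-level folder of the current git repository
# (quoted so paths containing spaces still work)
alias root='cd "$(git rev-parse --show-toplevel)"'

# Print the most recently modified entry in the current directory
alias lastfile="ls -t | head -1"
```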

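One common script I use is `bigdir`. This script shows me the size of every folder in my current directory, which helps me locate folders taking up significant space on my computer.

```bash
#!/bin/bash
# bigdir: show the disk usage of each folder in the current directory.

# If there are no folders, make */ expand to nothing instead of itself.
shopt -s nullglob

# The trailing slash in */ makes the glob match directories only.
for dir in */; do
    # Strip the trailing slash so the output shows plain folder names.
    du -hs "${dir%/}" 2> /dev/null
done
```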
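A variation worth considering, sketched below as a hypothetical function named `bigdir_sorted`, lists the same folders ordered by size. It asks `du` for kilobytes with `-k` instead of `-h`, because plain numbers can be ordered portably with `sort -n`, whereas human-readable sizes such as `4.0K` and `1.2G` cannot.

```bash
#!/bin/bash
# bigdir_sorted: list the folders in the current directory by size,
# largest first, with sizes in kilobytes. Hypothetical name.
# The ( ... ) body runs in a subshell, so the shopt change does not leak out.
bigdir_sorted() (
    shopt -s nullglob
    dirs=(*/)
    # Nothing to report if there are no folders.
    ((${#dirs[@]} > 0)) || exit 0
    du -sk -- "${dirs[@]}" 2> /dev/null | sort -rn
)
```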

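Another script I use helps me find a text string within the files of a folder.

```bash
#!/bin/bash
# Search every file under the current folder for a string (case-insensitive)
# and print each matching file's name followed by its matching lines.

if [ $# -ne 1 ]; then
    echo "Call is: $(basename "$0") string"
    exit 1
fi

# -print0 with read -d '' handles file names containing spaces or newlines.
find . -type f -print0 | while IFS= read -r -d '' file; do
    file=${file#./}    # drop the leading "./" that find prints
    # grep -q only checks for a match; -- stops a search string that
    # starts with "-" from being read as an option.
    if grep -qi -- "$1" "$file"; then
        echo "******${file}******"
        grep -i -- "$1" "$file"
    fi
done
```

These are just a few examples of ways to use scripts and aliases to improve your productivity. Do you have a favorite script or alias? Share it below!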