A variety of computer certifications exist today. Those certifications fall into one of two categories: vendor-neutral or vendor-specific. In the vendor-neutral category, CompTIA is the industry leader. Best known for its A+ certification, CompTIA has been around for 40 years and has certified over 2.2 million people.

Today, CompTIA issues over a dozen IT certifications for everything from computer hardware to project management. Beyond single certifications, CompTIA also offers what it calls ‘Stackable Certifications’. These are earned by completing multiple CompTIA certifications. For example, earning both the A+ and Network+ certifications results in the CompTIA IT Operations Specialist certification.

Hardware Certifications

Individuals who want to work with computer hardware maintenance and repair should start with the A+ certification. This exam covers basic computer hardware and Windows administration tasks. For anyone wanting to work with computers, it provides the fundamental knowledge required for success.

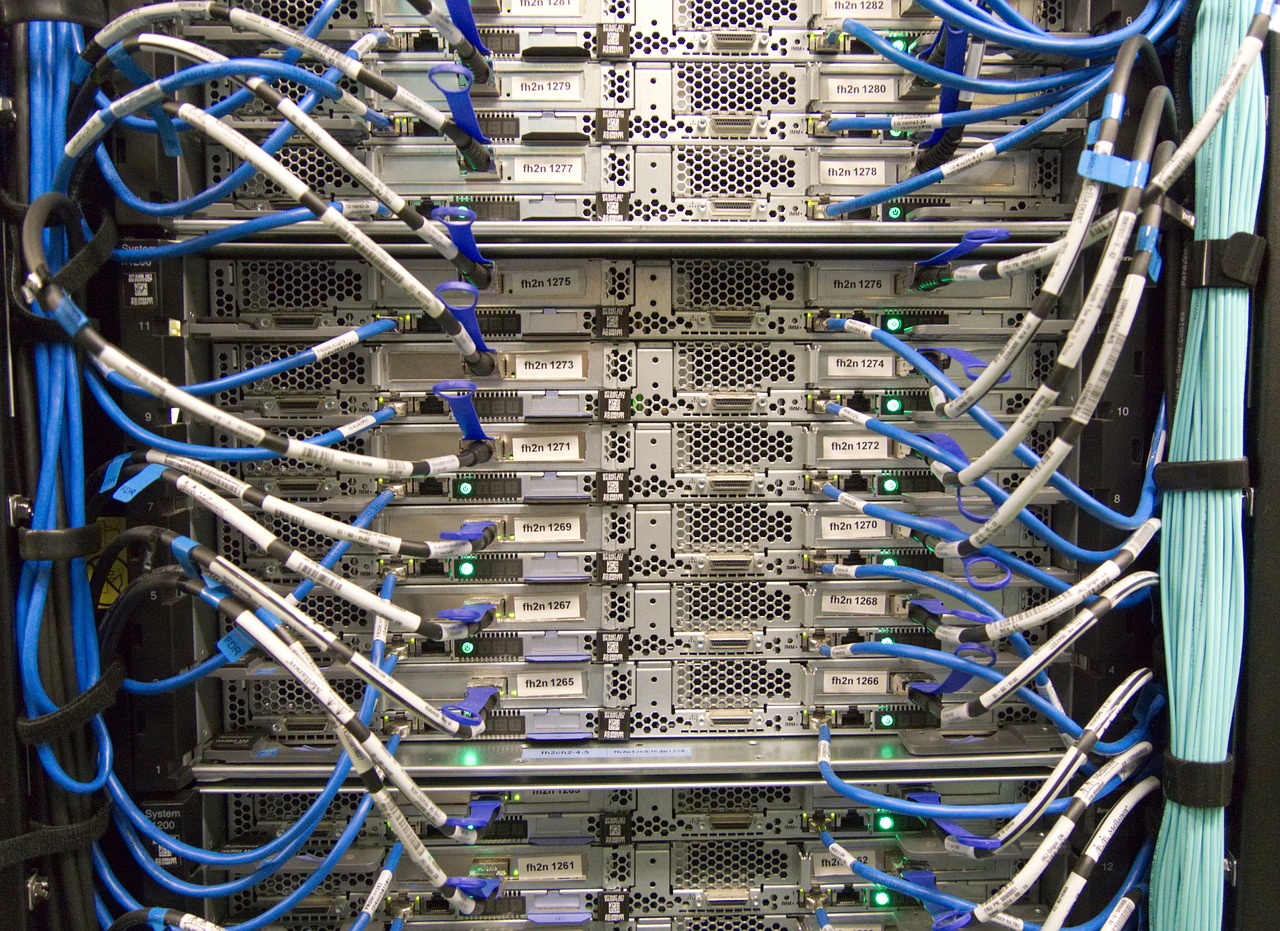

Once you have mastered computer hardware, the next step is computer networks. This knowledge is covered by CompTIA’s Network+ certification. Topics in this exam include both wireless and wired network configuration, knowledge of switches and routers, and other topics required for network administration. Note that this exam is vendor-neutral. As such, knowledge of a specific vendor's routers (such as Cisco's) is not required.

Security Certifications

CompTIA offers a variety of security certifications for those who wish to ensure their networks are secure or to test network security. The first exam in this category is the Security+ exam. This exam covers the basics of security, including encryption, Wi-Fi configuration, certificates, firewalls, and other security topics.

Next, CompTIA offers a variety of more in-depth security exams on topics such as penetration testing (PenTest+), cybersecurity analysis (CySA+), and advanced security issues (CASP+). Each of these exams continues where the Security+ exam ends and requires far more extensive knowledge of security. With all of the security issues in the news, these certifications are in high demand among employers.

Infrastructure Certifications

CompTIA offers several tests in what it calls the ‘infrastructure’ category. These exams are particularly useful for people who administer cloud systems or manage servers. Certifications in this category include Cloud+, Server+, and Linux+. If your organization uses cloud-based platforms, such as AWS or Google Cloud Platform, these certifications provide a vendor-neutral starting point. However, if you really want to dive deep into a platform like AWS, Amazon offers numerous exams specifically covering its platform.

Project Management Certification

Although it is not hardware-related, CompTIA also offers an entry-level project management certification called Project+. This exam is less detailed and less time-consuming than other project management certifications but covers the essentials of project management.

Conclusion

For the aspiring techie or the individual looking to advance their career, CompTIA provides a number of useful certifications. While certifications from other vendors may cost thousands of dollars, CompTIA exams are generally under $400. This is money well spent in the competitive IT world as CompTIA is one of the most respected names in vendor-neutral IT certifications.