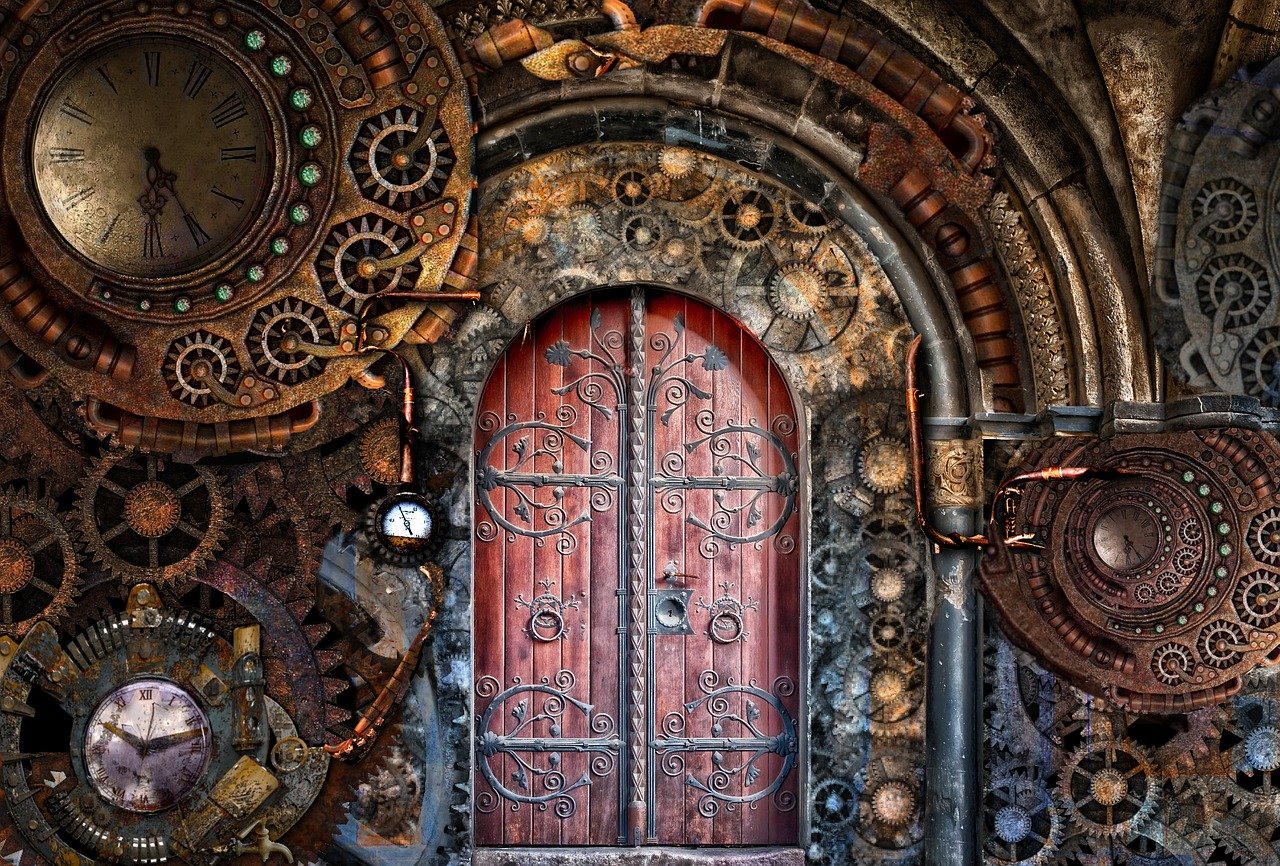

When you think of technology, what comes to mind? Most people would say cell phones, computers, and other transistorized gadgets. But there’s a whole other world of technology out there that’s equally exciting and all too often forgotten by most of us: mechanical technology. While the history of electronics is short, mechanical technologies have been changing the world for thousands of years. Archimedes’ screw and the lighthouse of Alexandria, for instance, were both built before the birth of Christ. But to me, no mechanical technology is quite as interesting as clocks and watches. From the sundial to the Apple Watch, people have always been fascinated by the passing of time. And while it’s easy to look at smart watches as engineering marvels, they’re really not much different from a phone or tablet shrunk to extreme miniature. In fact, smart watches are far less exciting, technologically speaking, than many of the analog watches available today. For instance, the Citizen Navihawk can set its time from radio signals from around the world, runs on solar power, contains a circular slide rule, and shows the time in multiple time zones. With 5 different dials and 8 hands, just watching it in action is fascinating. Another interesting Citizen watch is the Altichron. While it has far fewer dials than the Navihawk, it offers something even more practical: a compass and an altimeter.

Of course, there are countless other mechanical engineering marvels out there, such as automatons and mechanical robot hands. Even more exciting? The union of electronic and mechanical technology! Components such as reed switches and tilt switches use mechanical means to interact with electronics. Mechanical hands driven by servos let us build machines that interact with the world the same way we do. So, as we as a nation continue to focus on STEM, let’s not forget that it’s not just about electronics! The technology behind mechanical objects is just as exciting, and it’s the union of mechanical objects and electronics that will move us into the future!

Flavor of the Week

The internet has had an amazing impact on the world. Not only does it give us access to more information, faster than ever before, but it also gives us instant access to software and libraries. As a developer, I rely on tools like Maven or Gradle to automatically retrieve code libraries from the internet on demand. But the speed at which information and code now spread has also created a ‘flavor of the week’ environment for programming frameworks and technologies. Twenty years ago, if you were a professional developer, you probably worked in C, C++, Visual Basic, or Delphi. Today, it’s hard to count all the different languages out there. On the Java Virtual Machine alone we have Java, Scala, Groovy, Clojure, Kotlin, and others. For JavaScript, developers can choose from jQuery, React, and Angular, and those are just the most popular options. With each new project, companies examine the tools available and change their development stack to use the latest and greatest. Code that used to take a decade or more to become legacy is now obsolete within a few years.

When I entered the IT world, I needed to know one programming language – that was it. Now, reading the technical requirements in job postings is a daunting task, as each company has cherry-picked what it believes to be the best technologies across a variety of realms. Developers are increasingly expected to be experts in an unimaginable number of technologies. What’s worse, many technologies never reach maturity before they’re completely overhauled. Consider Angular. While it’s an excellent framework for application development, it has changed so much since its creation that it’s nearly impossible to find answers to questions: much of what you find online was written for an earlier version. Long-term, the IT world is going to end up with innumerable projects that are no longer maintainable, because their technology stack has become obsolete or their developers have moved on to the new flavor of the week. Even worse, programmers are becoming jacks-of-all-trades, which means code quality suffers, bugs increase, and time-to-market grows because nobody has mastered the tools they’re working with.

None of this is bringing any value to end users or tech companies. In fact, it’s just creating more code that will be obsolete before it’s even deployed.

Happy Employees

What is the most important asset of any organization? Is it the physical property – buildings, computers, and desks? Is it the capital reserves in the bank? How about the portfolio of intellectual property? While all of the above are important, they pale in comparison to the value of the organization’s people. An organization’s employees take care of and use the physical property, spend capital on activities that grow the business, and put intellectual property to work for economic gain. It’s the people who make it all happen; without them, everything else is just a collection of inanimate objects. Unfortunately, people like Jack Welch failed to see that value and helped shift the market toward treating people as nothing but another expendable resource. In the eyes of people like Jack Welch, people are no different than the wood used to make a door, the plastic used to make a consumer good, or a glob of solder used to make electronic goods. People became commodities instead of human beings.

How has this affected the workplace? More and more, people don’t stay with an organization for very long. Changing jobs every few years is increasingly common. Of course, this is only logical: why would you want to work for an employer who doesn’t value you as a human being? Businesses do whatever it takes to keep employee pay as low as possible while increasing the CEO’s pay. When annual raises come due, the lower the number the better. Employees quickly see that if they want to get ahead, they will need to find another job that pays more. What if employers handled raises as if each employee were a potential new hire they wanted to be sure would accept the offer?

What would happen if the business world changed to value people over profits? What if companies placed their people first – ahead of profits, ahead of the customer, and ahead of the shareholder? It sounds radical, but companies that have implemented such policies have seen higher employee retention and greater employee happiness. Most importantly, that greater happiness translated directly into happier customers, who then did more business with the organization and improved shareholder value. Countless books on servant leadership and employee-focused business models exist, and they all show the same thing: employees who feel valued are more productive and happier to be at work.

A book I recently read said that – as a small business owner – I need to “fall in love with my customer”. The notion is that I should treat my customer like they are the most important thing on earth. Treat them like I treat my wife. Treat them as if I want nothing more than to spend time with them. When I treat my customers that way, my customers won’t want to leave. That same idea applies to employees – what if businesses treated their employees like they loved them?

The Benefit of Software as a Service

One of the biggest trends in the software world over the last decade has been the migration to Software as a Service. For people who are unfamiliar with the term Software as a Service (abbreviated SaaS), it’s the notion of paying to use software on an ongoing subscription basis instead of purchasing a license for an application. In the ’90s, users would go to the store, find the software they wanted, purchase it, and then use that application forever. If a new version came out, the user would have to purchase it separately. Applications like Microsoft Office, Quicken, the Adobe product suite, and others would come out with new versions every few years, and users would have to shell out the cash for each new version or keep using the old one. For many applications, this quickly became cost-prohibitive, since some applications cost hundreds or even thousands of dollars.

During the last decade, that model has changed. And while many complain about paying monthly for software, it’s actually a great benefit for the user. For example, I have a subscription to Adobe Creative Cloud that costs me around $50/month. With that subscription, I have access to every single Adobe application. Likewise, Microsoft Office now costs around $100/year for the entire application suite. This lower cost means that I have access to software I couldn’t previously afford. Furthermore, I automatically get all updates. No longer do I need to buy each new version when it comes out; instead, updates are installed on my computer automatically. In the end, I get the most up-to-date software at a fraction of the cost I used to pay. I know some users don’t like paying monthly for an application. But for me, having all the software I need at a fraction of the cost, with all future versions included, is well worth the monthly fee.

The Shoulders of Giants

This week saw the passing of a giant in the world of physics with the death of Stephen Hawking. His work has forever changed the landscape of cosmology. Throughout history, men and women of every era have driven our collective future to where it is today. As a computer programmer, who do I look to as the giant in my realm? Many people might point to Bill Gates or Steve Jobs. Indeed, both have made an impact, but their skill was in their ability to merge technology with astute business sense. No, for me there is a much more important man who has had an impact on virtually everything in the technology world today: Dennis Ritchie. For most Americans, the name couldn’t be more foreign. Yet his impact could not possibly be more profound. Dennis Ritchie created the C programming language and, together with Ken Thompson, the Unix operating system. Much like his name, his projects are unknown to most people outside of technology. But just about everything in computer programming owes much to C. The C programming language is widely credited with influencing C++, Java, Objective-C, C#, Go, JavaScript, PHP, and countless others. These languages run most of the computers, cell phones, and websites in existence today. His Unix operating system was the inspiration for Linux and numerous other operating systems. Today, both OS X and iOS are built on Apple’s Darwin core, which draws heavily on BSD, another Unix descendant. Android, for its part, runs on the Linux kernel. If I could meet any person – living or dead – Dennis Ritchie would be high on my list. As a programmer, I see in him a demonstration of the power one person can have to change the world with technology. It is humbling to consider how much further I can see simply because I have the benefit of standing on the shoulders of giants like Dennis Ritchie.

.Net Core 2.0 Woes

One of my favorite technologies is Microsoft’s .Net Core 2.0 framework. Among the things I love: it’s cross-platform, it has an excellent framework for interacting with databases, and its MVC model is straightforward to implement. However, for all that Microsoft has managed to accomplish, there are still some major shortcomings. (Before going further, let me point out that I develop on a Mac using Visual Studio, so a native Windows user may have a different experience.) For example, while Entity Framework is amazing, its support for stored procedures is less stellar. Only after days of searching did I finally come across a working answer. This is the biggest problem with .Net Core: the lack of answers on the web. Or, more precisely, the lack of the right answer. Look up any question on how to do something in .Net Core and you’ll find a dozen different answers, none of which actually work. This problem came up over and over again. When I tried to store a simple session variable, I saw tutorial after tutorial, and none of them worked.

Next on the list is the NuGet package manager. As a Java user, I have grown accustomed to pulling libraries from a remote repository using Maven or Gradle, so I was happy to see similar functionality for .Net. The problem? It’s nearly impossible to find which package contains the thing you’re looking for. Online code samples rarely include the necessary imports, so you’re left to guess what you need. This problem is made worse by the horrible idea of extension methods: every package you import can add extension methods to classes defined in other packages. This sounds great, but in reality it only makes it harder to figure out what to import.

Last, the way you configure the web server is the worst I have ever seen: you configure it in code! Want to use a different port? Hard-code it. Need to run the app in a subdirectory? Hard-code it. Need to set up database support? Hard-code it. So, as the app moves from machine to machine, the code actually has to be changed to support the configuration each new server requires. I have seen various examples of using configuration files to accomplish this, but like everything else, the documentation is poor and inconsistent at best. In the end, while I do love what Microsoft has accomplished with .Net Core 2.0 and its MVC framework, it still has a long way to go before I would consider it able to seriously compete with more mature frameworks.

Web Servers

Earlier this week, I posted about the importance of analyzing requirements to determine the best technology for solving a given problem. Today, I want to dive deeper and talk about different web servers and where they fit best. Having spent well over a decade developing Java web applications, I’m often quick to assume JBoss or Tomcat is the best answer. But as this article will point out, that’s often simply not the case. (Note: for this article, I am not concerning myself with the difference between a web server and an application server. At the end of the day, if the solution solves the problem, who cares?)

First on our list of servers is Apache. Apache has been around since 1995 and is often the first choice for websites. If you run a Linux machine, chances are Apache is already installed or a single package install away. The real power of Apache lies in the modules you can use to add functionality to the server. Two of the most significant add PHP support and reverse proxying. Apache with PHP would be a good choice for a WordPress site or for eCommerce sites running PHP. However, as a site grows, PHP becomes more difficult to manage than other solutions. With reverse proxy support enabled, you’ll often see Apache set up to run in front of other servers, such as JBoss or Tomcat, or to provide load balancing.

Speaking of JBoss and Tomcat, these are both excellent servers in their own right. Both are intended to run Java web applications, which, thanks to the massive number of available Java libraries, can do just about anything. However, all that power comes at a cost. JBoss and Tomcat servers and applications are not particularly lightweight or easy to set up, and the Java code for many common tasks will be far more complex than in other solutions. For example, what if your application makes heavy use of JSON and REST services? While Java will handle this very well, a simple Node.js server may be much more lightweight. I can create a REST server in JavaScript in a fraction of the time it takes to create a corresponding Java server, regardless of the Java technologies used.

Maybe you want all the features of JBoss or Tomcat, but you want to deploy to Microsoft’s Azure platform. Microsoft’s .NET Core platform is an excellent choice. Not only is .NET Core now cross-platform (running on Linux and Mac), but its MVC framework is amazing. Furthermore, its Entity Framework is probably the best ORM I have ever used. What about solutions for makers and tech hackers? Python offers several web frameworks, including Flask and Django. Not only is Python an excellent language for beginners, but it’s become one of the primary languages for developing on the Raspberry Pi and other hardware platforms.

Countless other servers could be mentioned, but it’s easy to see from the above that solutions exist for every possible web server scenario. So, when you go to choose your platform, ask yourself which platform best fits your needs. Find an engineer to analyze the problem and recommend the best technologies. At the end of the day, selecting the right technology will result in a much shorter time to market and substantially lower development costs.

Requirements Analysis

One of the most important skills for a developer is the ability to analyze requirements and determine the most appropriate solution. For many developers, this means choosing the best suite of tools that fits within their preferred development environment. For example, a Java developer may decide whether JDBC or JPA is the better option for connecting to a database. Note that it was already assumed the application would be written in Java; other options like C# or PHP were ignored because the developer making the choice was a Java developer. The problem is that there may be better options depending on the requirements of the project. For example, I am currently working on a mobile project that uses a JavaScript framework. One of the app’s requirements is to create a fairly detailed PDF document. This document is created using pdfmake, an excellent toolkit for making PDF documents from a simple configuration. However, as the project grew, a request came in for a web service that would accept the configuration, generate the PDF, and send an email. What server language should I use? I could use Java, but do I want to rewrite the entire PDF generator in a new language? Absolutely not. What else could work? In the end, I opted for a Node.js solution. Why? Because it would be utterly trivial to reuse the existing JavaScript PDF code within a Node application. In fact, I managed to write all the necessary functionality in a single file with fewer than 100 lines of code. Had I selected Java, it would easily have grown into several dozen files, hundreds of lines of code, and substantially more billable hours.

Unfortunately, technology decisions are made every single day by organizations that insist on a language or framework before the requirements are even known. Better options may exist, but a lack of knowledge about competing technologies prevents them from being selected. In the end, projects take longer to develop, cost more, and become increasingly difficult to maintain. Certainly no developer can be an expert in every technology, but any senior developer should be able to offer a variety of competing solutions to a problem and explain the pros and cons of each. When the best technology is selected, projects come in ahead of schedule and under budget. Time-to-market decreases, maintenance costs are minimized, and, in the end, the organization benefits.

Text File Parsing

It’s no secret to anyone that I’m a Unix guy. I’ve worked on Unix-based systems for over 20 years now. If I’m forced to use a Windows machine, the first thing I do is install software that gives me a more Unix-like experience. I could give dozens of reasons why I love Unix, such as its programming environments, robust command-line utilities, and stability. But one of the most useful to me is the ability to perform complex text manipulations from the command line. Parsing log files, editing text files on the fly, creating reports: all of these are easy from a Unix command line. Of all the commands I use, some of my favorites are sed, tr, grep, and cut. With just those four commands I can make magic happen. For instance, today I had a configuration file for a Gitolite server from which I needed to generate a list of repositories. I could have opened the file in a text editor and edited it by hand… but that’s work. As a programmer, I program things so I don’t have to do trivial work. Besides, given the large number of repositories in the config file, editing by hand would take too much time and be prone to error. Knowing the format of the config file, I opened a command prompt and started chaining commands together to transform the data one step at a time. At the end, I had a list of every repository, sorted alphabetically. This list could then be fed into another process, for example one that performs a maintenance task on each repository. The final command was a long chain of small commands piped together:

```shell
cat gitolite.conf | grep 'repos/' | cut -d'=' -f2 | tr ' ' '\n' | tr -d ' ' | grep -v '^$' | grep -v '@' | grep 'repos' | sort
```

While it may look cryptic to the uninitiated, each piece has a simple job. First, I used the cat command to display the contents of the Gitolite config file. The cat command is useful for displaying text data from a Unix command prompt, and it is a common starting point for Unix text processing. Next, grep finds all lines containing the text ‘repos/’, which I know is the prefix of every repository name in the configuration file. Grep is another commonly used Unix command; it searches its input for a text pattern and displays the matching lines. Numerous versions of grep exist that provide all kinds of advanced functionality, but basic grep is still the most commonly used. Now that I have all the lines containing repository names, I can begin to process that list. I start by using cut to remove the variable names. Since Gitolite allows variables to be defined (@var = value), and I only want the value, I tell cut to split on the equal-sign delimiter (-d'=') and give me only the second field (-f2). Since multiple repository names may be on a single line, I next need to split the data on spaces so that each repository is on its own line. The tr command translates one character to another, so in this instance I change each space (' ') to a newline ('\n'), which is like hitting the return key on the keyboard. Next, I delete any remaining spaces using the -d flag of tr; this is a safety net, since the previous tr has already turned every space into a newline. At this point, my output contains empty lines, which I want to remove. The -v argument tells grep to drop lines matching the supplied pattern instead of keeping them. Here, the cryptic ‘^$’ matches an empty line: ^ anchors the beginning of the line and $ anchors the end (these are standard regular expression anchors). Next, a couple more grep commands clean up the data: grep -v '@' drops group names such as @var, and grep 'repos' keeps only entries containing ‘repos’. Finally, I pipe the result to the sort command. Now I have my list of repositories, ready for whatever follow-on processing I want. The last step is to copy the above commands into a shell script so that I don’t have to type them all in again.
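Following that last step, here is a minimal sketch of what such a script might look like. The script name list-repos.sh and the function name list_repos are hypothetical, and the pipeline is a slight variation on the one-liner rather than the exact original: grep reads the file directly instead of going through cat, and tr turns tabs as well as spaces into newlines, which makes the separate tr -d ' ' step unnecessary.

```shell
#!/bin/sh
# list-repos.sh (hypothetical name): print every repository path found in a
# Gitolite config file, one per line, sorted alphabetically.
# Usage: sh list-repos.sh path/to/gitolite.conf

list_repos() {
    # 1. keep lines that mention repos/ (grep reads the file itself, no cat)
    # 2. keep the second '='-separated field; lines without '=' pass through
    # 3. turn spaces and tabs into newlines so each name gets its own line
    # 4. drop blank lines, @group names, and anything without "repos" in it
    #    (such as the bare "repo" keyword), then sort
    grep 'repos/' "$1" |
        cut -d'=' -f2 |
        tr ' \t' '\n\n' |
        grep -v '^$' |
        grep -v '@' |
        grep 'repos' |
        sort
}

if [ "$#" -gt 0 ]; then
    list_repos "$1"
else
    echo "usage: $0 path/to/gitolite.conf" >&2
fi
```

Redirecting the output, for example `sh list-repos.sh gitolite.conf > repos.txt`, gives a follow-on maintenance process a plain file to read.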

Throughout my career, I have used the above process innumerable times. I can extract any manner of text data from a file quickly and easily simply by using a string of commands to incrementally transform the data to my desired output. If you are a programmer, and you’re not familiar with the above commands, you’re probably working too hard.
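To watch the original one-liner work without access to a real Gitolite server, here is a self-contained sketch that runs it against a small sample config. The file contents, group names, and repository names are invented for illustration; a real gitolite.conf will look different.

```shell
#!/bin/sh
# Self-contained demo: write an invented gitolite.conf to a temp file and run
# the one-liner from this post against it. All names below are hypothetical.
conf=$(mktemp)
cat > "$conf" <<'EOF'
@staff      = alice bob
@projects   = repos/alpha repos/beta @legacy
repo repos/gamma
    RW+     = @staff
repo @projects
    R       = @all
EOF

# Same pipeline as the one-liner, reading the sample file instead.
repos=$(cat "$conf" | grep 'repos/' | cut -d'=' -f2 | tr ' ' '\n' |
    tr -d ' ' | grep -v '^$' | grep -v '@' | grep 'repos' | sort)
rm -f "$conf"

printf '%s\n' "$repos"
# prints:
# repos/alpha
# repos/beta
# repos/gamma
```

Note how the `repo repos/gamma` line, which has no `=`, passes through cut unchanged; the bare `repo` keyword is then removed by the final grep 'repos', while the @legacy group reference is removed by grep -v '@'.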

LaTeX?

Numerous times throughout my life I have wanted to write a book. I even have several manuscripts on my computer in various stages of completion. During the last month, I decided to try again. Step one was to decide what I wanted to write about, so I came up with about 10 topics and chose one for my first book. Step two was to determine what tool I would use to write it. A variety of software applications are available, including Microsoft Word, Apple Pages, and Adobe InDesign. But one of my requirements is that the format be text-based, so that I can easily store the document and its history in my Git repository. Initially, I thought Markdown would be a great format: text-based, easy to store in a source repository, and no special software required. However, after a short amount of work, I realized that Markdown didn’t really support the things I would want for writing. The first issue was inadequate support for page layouts, including images. Other issues followed, and I went back to the drawing board in search of a better alternative.

While searching the internet, I found numerous sites recommending LaTeX. I had never used LaTeX before, but I remembered seeing it referenced over the years on Linux systems. Could this be the answer? Does it meet my requirements? Like HTML or Markdown, LaTeX uses markup: commands such as `\tableofcontents`, `\chapter`, and `\section` indicate things like the table of contents, chapters, and sections. Additional functionality can be added with packages that fill all manner of typography needs. The files are plain text, so they can easily be managed by a source control system and edited in any standard text editor. What about the output? I can easily run the LaTeX commands to produce my document as a PDF, and I have even read that tools exist for conversion to ePub. When I add new chapters or update existing content, I can have my Jenkins server automatically build the full document and email it to me or publish it any way I choose.

While I have only been tinkering with LaTeX for a short time, I have been very impressed with what I’ve been able to accomplish so far, and I intend to master this amazing tool for creating documents and manuscripts.
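As a rough illustration of the build step a Jenkins job could run, here is a minimal sketch that writes a tiny LaTeX book skeleton and compiles it with pdflatex. It assumes a TeX distribution such as TeX Live is installed; the file name book.tex and the chapter and section titles are placeholders, not part of any real manuscript.

```shell
#!/bin/sh
# Minimal sketch of a book build step (e.g. for a Jenkins job).
# Assumes a TeX distribution that provides pdflatex; book.tex and the
# chapter/section titles are placeholders.
set -e

# A tiny LaTeX skeleton: document class, one package, a table of contents,
# and a chapter with a section.
cat > book.tex <<'EOF'
\documentclass{book}
\usepackage{graphicx} % adds \includegraphics for placing images

\begin{document}
\tableofcontents

\chapter{Getting Started}
\section{Why Plain Text?}
Plain text sources diff cleanly and live happily in Git.

\end{document}
EOF

# Two passes: the first records chapter and section titles in auxiliary
# files, the second uses them to typeset the table of contents.
if command -v pdflatex >/dev/null 2>&1; then
    pdflatex -interaction=nonstopmode -halt-on-error book.tex
    pdflatex -interaction=nonstopmode -halt-on-error book.tex
else
    echo "pdflatex not found; install a TeX distribution such as TeX Live" >&2
fi
```

If latexmk is installed, `latexmk -pdf book.tex` reruns pdflatex as many times as needed on its own, which can keep a CI job to a single command.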