Last week, we talked about distance calculations for Artificial Intelligence. Once you’ve learned how to calculate distance, you need to learn how to calculate an overall error for your algorithm. There are three main error calculations: sum of squares, mean squared, and root mean squared. They are all relatively simple, but they are key to many Machine Learning algorithms. As an AI algorithm iterates over the data time and time again, it tries to find a better solution than the one from the previous iteration. A lower error score indicates a better answer and progress toward the best solution.

The error calculations are similar to the distance calculations. However, distance measures how far apart two points are, whereas error measures how far the AI's output answers are from the expected answers. The three formulas below show how each error is calculated. Note that each one builds on the one before it. The sum of squares error is, as the name suggests, the sum of the squared errors of each answer. Because every squared term is zero or positive, the sum of squares generally grows as the number of answers increases. Thus, to compare errors across data sets with different numbers of values, we divide by the number of items to get the mean squared error. Finally, if you want a number in the same units as the original answers, take the square root of the mean squared error.

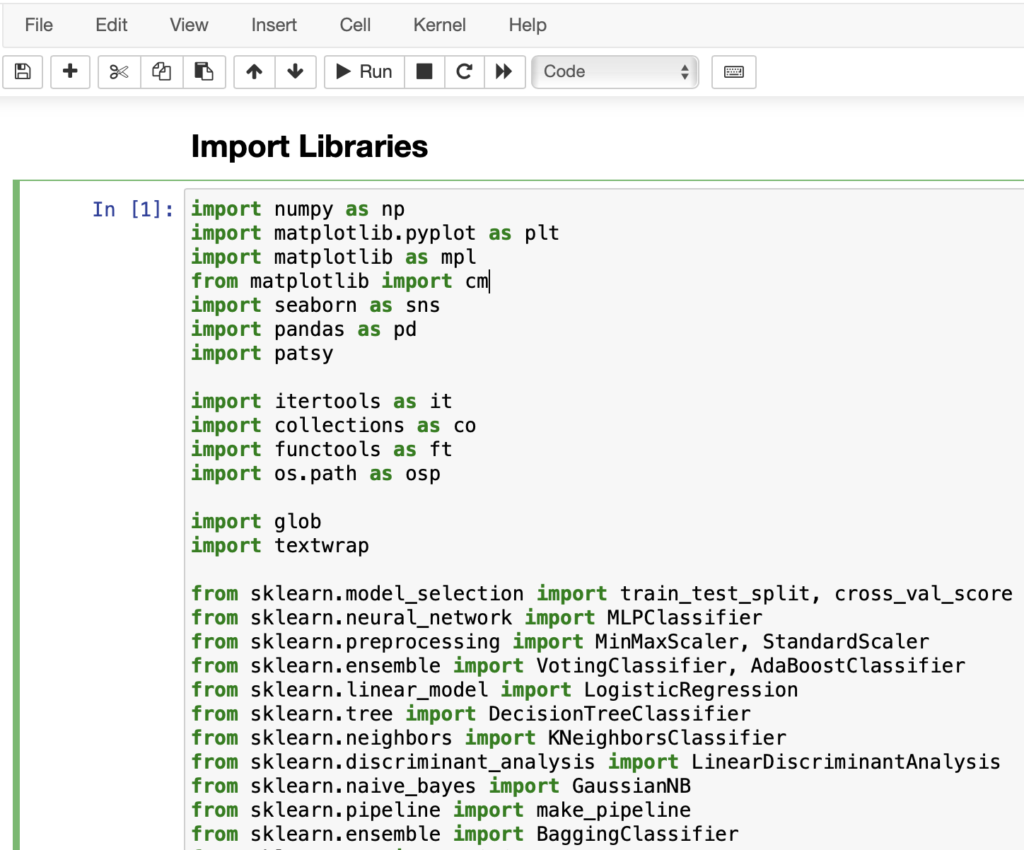
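
Written out in notation, the three measures look roughly like this. The symbols are chosen here for illustration and are assumptions, not taken from the image: $e_i$ is the expected answer for item $i$, $a_i$ is the AI's actual output, and $n$ is the number of items.

$$
\begin{aligned}
\text{SSE}  &= \sum_{i=1}^{n} (e_i - a_i)^2 \\
\text{MSE}  &= \frac{1}{n} \sum_{i=1}^{n} (e_i - a_i)^2 = \frac{\text{SSE}}{n} \\
\text{RMSE} &= \sqrt{\frac{1}{n} \sum_{i=1}^{n} (e_i - a_i)^2} = \sqrt{\text{MSE}}
\end{aligned}
$$

Each line reuses the one above it, which is the same layering the code follows.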

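    // Sum of squares: add up the squared difference for every answer.
    public static float sumOfSquares(final float[] expected, final float[] actual) {
        // The arrays are compared pairwise, so they must be the same length.
        if (expected.length != actual.length) {
            throw new IllegalArgumentException("expected and actual must have the same length");
        }
        float sum = 0;
        for (int i = 0; i < expected.length; ++i) {
            final float diff = expected[i] - actual[i];
            sum += diff * diff;
        }
        return sum;
    }

    // Mean squared: sum of squares divided by the number of answers.
    // Note: for empty arrays this divides 0 by 0 and returns NaN.
    public static float meanSquared(final float[] expected, final float[] actual) {
        return sumOfSquares(expected, actual) / expected.length;
    }

    // Root mean squared: square root of the mean squared error.
    public static float rootMeanSquared(final float[] expected, final float[] actual) {
        return (float) Math.sqrt(meanSquared(expected, actual));
    }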
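
To see the three numbers side by side, here is a small worked example. It is only a sketch: the class name `ErrorDemo` and the sample values are made up for illustration, and the three methods are repeated inside the class so it compiles on its own. The second pair of arrays repeats the first data set twice, to show the sum of squares growing with the number of answers while the mean squared error stays the same.

```java
public class ErrorDemo {

    // The same three calculations as in the post, repeated here
    // so this example is self-contained.
    public static float sumOfSquares(final float[] expected, final float[] actual) {
        float sum = 0;
        for (int i = 0; i < expected.length; ++i) {
            final float diff = expected[i] - actual[i];
            sum += diff * diff;
        }
        return sum;
    }

    public static float meanSquared(final float[] expected, final float[] actual) {
        return sumOfSquares(expected, actual) / expected.length;
    }

    public static float rootMeanSquared(final float[] expected, final float[] actual) {
        return (float) Math.sqrt(meanSquared(expected, actual));
    }

    public static void main(String[] args) {
        // Hypothetical sample data: four expected answers and four AI outputs.
        final float[] expected = {1.0f, 2.0f, 3.0f, 4.0f};
        final float[] actual   = {1.5f, 2.0f, 2.5f, 4.5f};

        // Squared differences are 0.25, 0, 0.25, 0.25.
        System.out.println("Sum of squares:    " + sumOfSquares(expected, actual));    // 0.75
        System.out.println("Mean squared:      " + meanSquared(expected, actual));     // 0.1875
        System.out.println("Root mean squared: " + rootMeanSquared(expected, actual)); // roughly 0.433

        // The same data repeated twice: eight answers instead of four.
        final float[] expectedTwice = {1.0f, 2.0f, 3.0f, 4.0f, 1.0f, 2.0f, 3.0f, 4.0f};
        final float[] actualTwice   = {1.5f, 2.0f, 2.5f, 4.5f, 1.5f, 2.0f, 2.5f, 4.5f};

        // The sum of squares doubles, but the mean squared error does not change.
        System.out.println("Sum of squares (x2): " + sumOfSquares(expectedTwice, actualTwice)); // 1.5
        System.out.println("Mean squared (x2):   " + meanSquared(expectedTwice, actualTwice));  // 0.1875
    }
}
```

The root mean squared value of about 0.433 is on the same scale as the individual misses of 0.5, which is why it is usually the easiest of the three to interpret.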