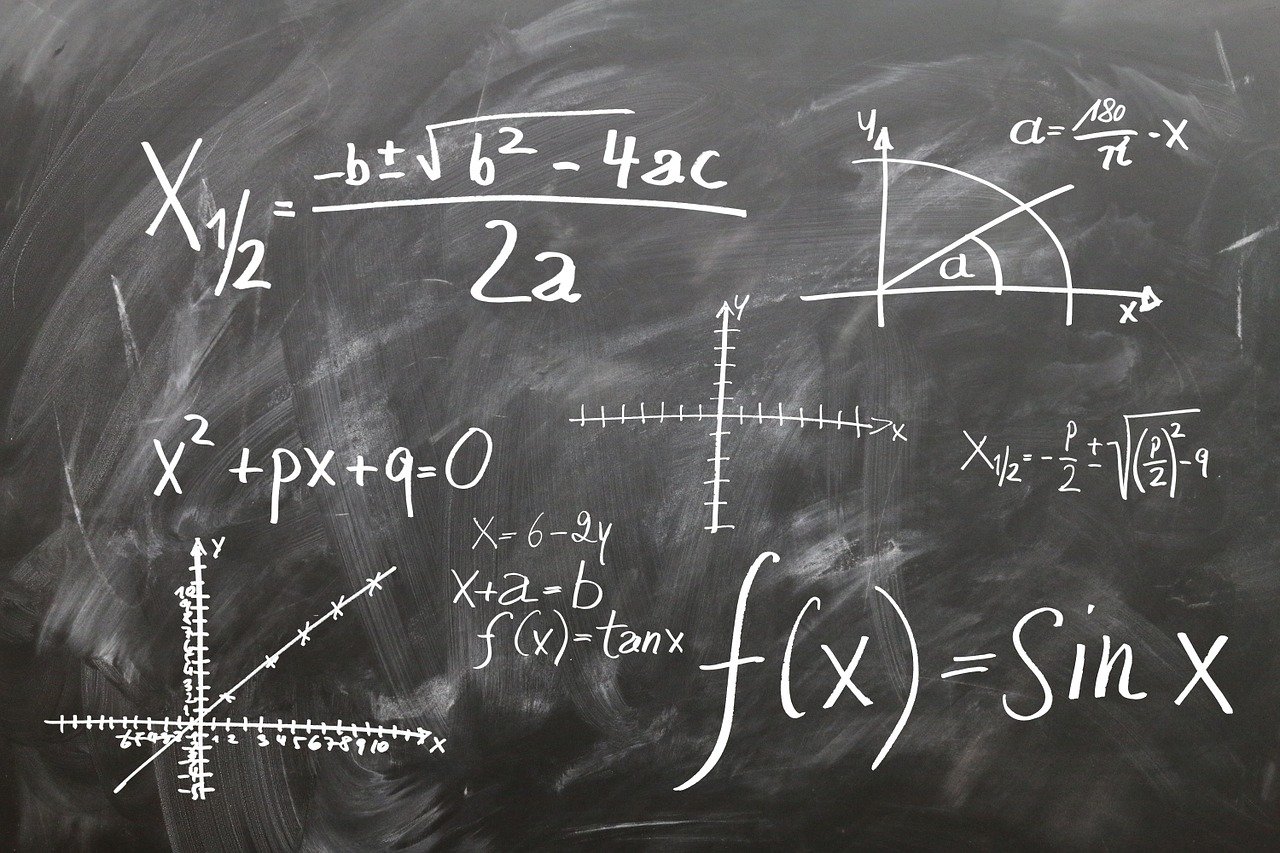

Computer Vision is a rapidly growing technology field that most people know little about. While Computer Vision has been around since the 1960s, its growth really exploded with the creation of the OpenCV library, which gives software engineers the tools they need to build Computer Vision applications.

But what is Computer Vision? Computer Vision is a mix of hardware and software tools used to identify objects in a photo or camera feed. One of the better-known applications is the self-driving car, where numerous cameras collect video. The video streams are then examined to find road signs, people, stop lights, lane markings, and anything else that is essential for safe driving.
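To make "identifying objects" a little more concrete, here is a minimal sketch in plain Python. It is only an illustration, not how OpenCV or a self-driving car actually works: it assumes a tiny grayscale frame stored as a list of lists, and the helper name `find_bright_region` and the threshold of 128 are hypothetical choices made for this example. The idea is simple thresholding: treat every pixel brighter than the threshold as part of the object, then report the bounding box around those pixels.

```python
def find_bright_region(frame, threshold=128):
    """Return (top, left, bottom, right) of all pixels above threshold, or None."""
    # Rows that contain at least one bright pixel.
    rows = [r for r, row in enumerate(frame) if any(p > threshold for p in row)]
    # Column index of every bright pixel in the frame.
    cols = [c for row in frame for c, p in enumerate(row) if p > threshold]
    if not rows:
        return None  # nothing bright enough to count as an object
    return (min(rows), min(cols), max(rows), max(cols))


# A 5x6 "frame": 0 is dark background, 255 is a bright object.
frame = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 255, 255, 0, 0],
    [0, 0, 255, 255, 0, 0],
    [0, 0, 0, 255, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

print(find_bright_region(frame))  # (1, 2, 3, 3)
```

Real systems replace the threshold test with far more capable detectors, but the overall shape is the same: pixels go in, and the location of something meaningful comes out.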

However, this technology isn’t limited to self-driving cars. A vehicle I rented a few months ago was able to read speed limit signs as I passed them and display the limit on the dash. Additionally, if I drifted close to a lane line without signaling, the car would beep.
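The lane warning described above comes down to a small decision once the vision system has estimated where the car sits in its lane. The following sketch assumes that estimate already exists; the function name `should_warn`, its parameters, and the 0.3 m margin are all hypothetical and are not taken from any real vehicle.

```python
def should_warn(lane_offset_m, lane_half_width_m, turn_signal_on, margin_m=0.3):
    """Decide whether to beep, given the car's offset from the lane center.

    lane_offset_m: signed distance from the lane center, in meters.
    lane_half_width_m: distance from the lane center to either lane line.
    """
    if turn_signal_on:
        return False  # the driver signaled, so the lane change is intentional
    distance_to_line = lane_half_width_m - abs(lane_offset_m)
    return distance_to_line < margin_m


print(should_warn(0.0, 1.8, turn_signal_on=False))  # centered in the lane
print(should_warn(1.6, 1.8, turn_signal_on=False))  # drifting, no signal
print(should_warn(1.6, 1.8, turn_signal_on=True))   # drifting, but signaled
```

The hard part in practice is the step this sketch skips: reliably finding the lane lines in the camera image in the first place.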

Another common place to find Computer Vision is in factory automation. In this setting, specialized programs may monitor products for defects, check the status of machinery for leaks or other problematic conditions, or monitor the actions of people to ensure safe machine operation. With these tools, companies can make better products more safely.

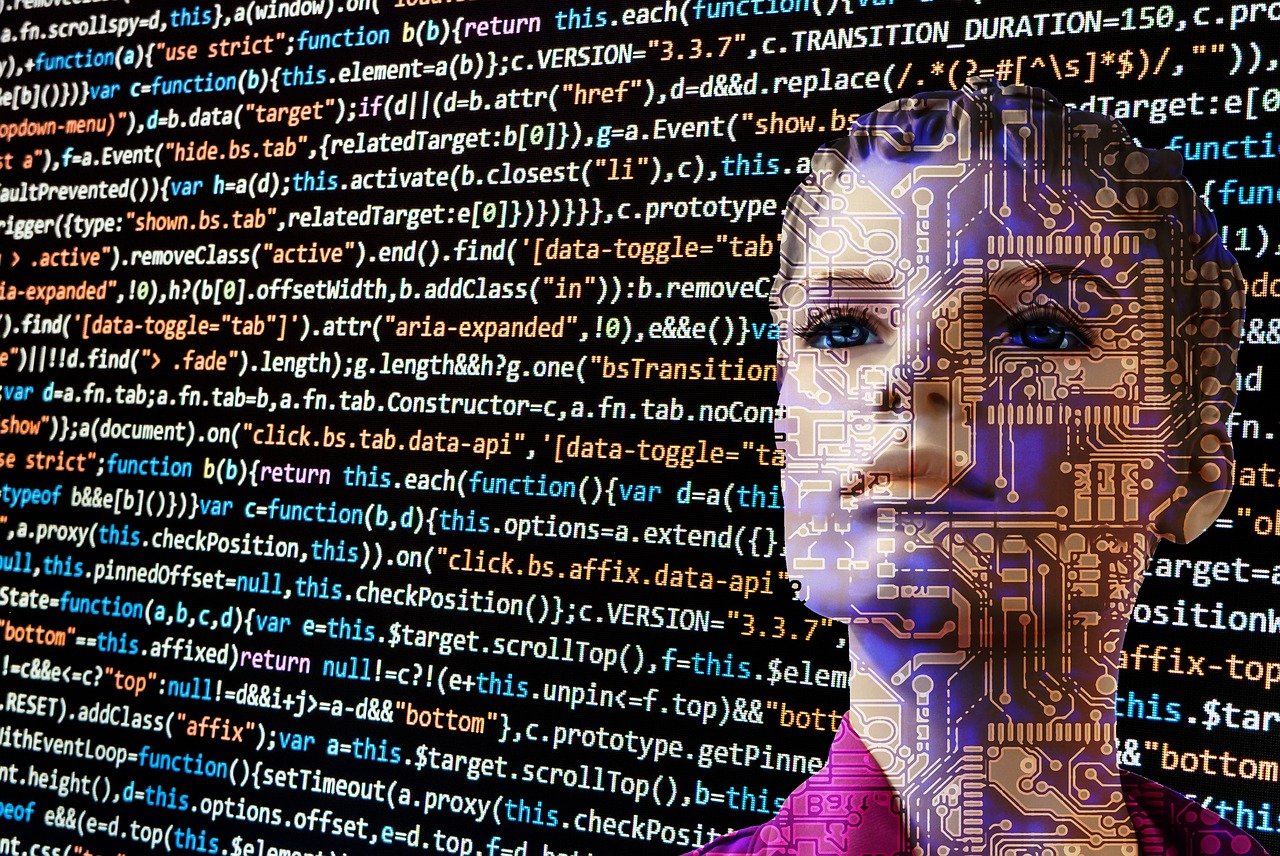

Computer Vision and Artificial Intelligence are also becoming more popular in medical applications. MRI and X-ray images can be processed using Computer Vision and AI tools to identify cancerous tumors or other health problems.

On the less practical side, Computer Vision tools are also used to modify user-created videos, for example by adding a hat or making a funny face. They can also identify faces in an image for tagging.

Computer Vision technologies are showing up in more and more places each day and, when coupled with AI, will ultimately result in a far more technologically advanced world.