The world has gone through some amazing transformations during the last half century. In the early ’80s, computers were a rarity and cell phones were a novelty for wealthy business executives. During the ’90s, all that changed with the release of Windows 95, which was a pivotal point in the history of technology. For the first time, computers were easy enough for the average home user to use. About a decade later, Apple introduced the iPhone, followed by Google’s Android platform, which changed the face of technology again. Today, computers and cell phones are ubiquitous.

My Experiences

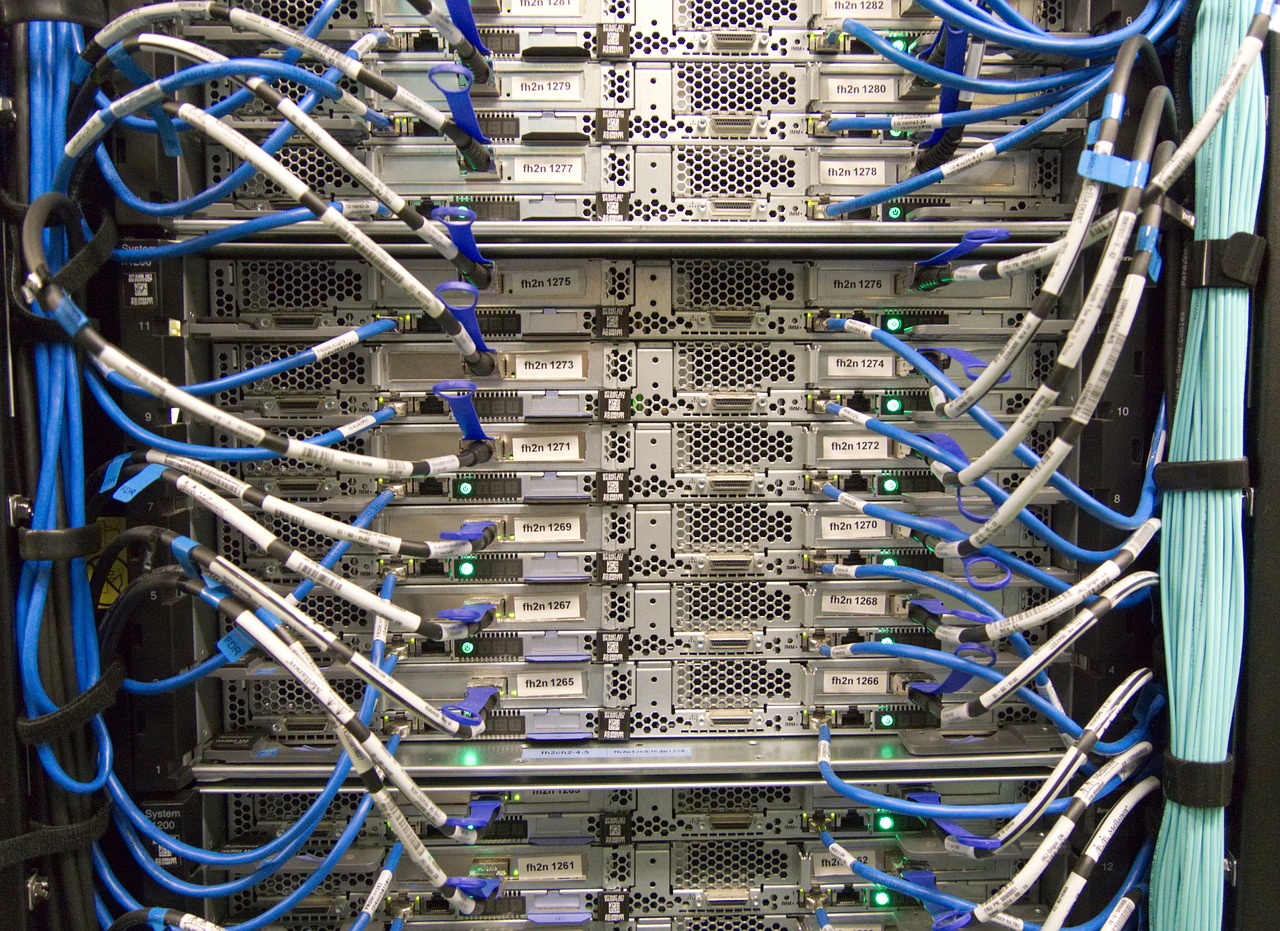

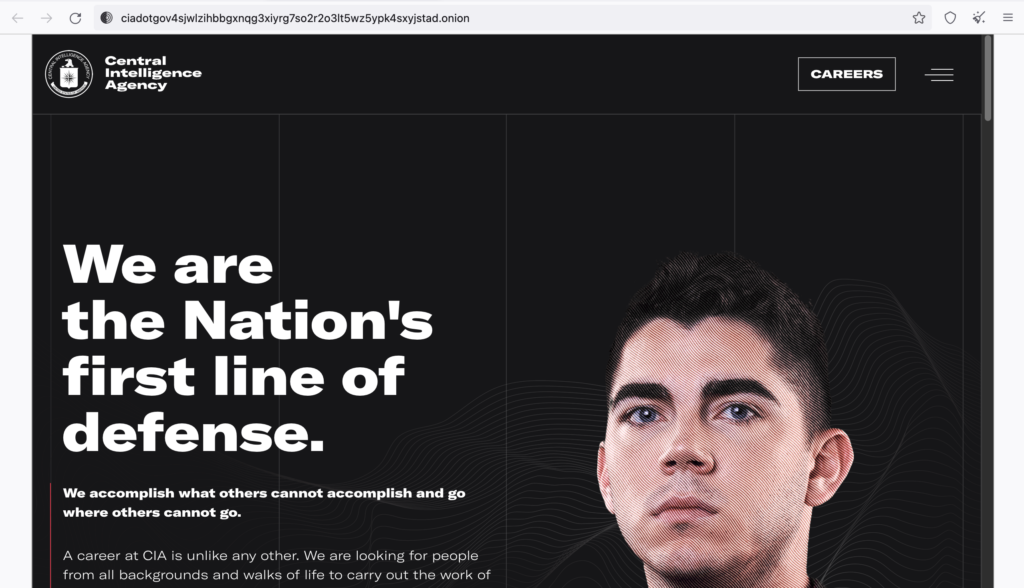

Born in the late ’70s, I have been able to witness this transformation firsthand. I have also had the incredible opportunity to take part in creating some of these technologies myself. My career began in the US Army in 1995, where I served as a member of the Intelligence Community. I learned to use and administer SunOS and Solaris machines and began experimenting with programming. It was in this environment that I developed a love for Unix-based systems that continues to this day.

On those Unix machines, I started programming in C, C++, TCL, Perl, and Bourne Shell. While my first programs were pretty bad, I eventually had the opportunity to write code for a classified government project. That code earned me a Joint Service Achievement Medal and had a profound impact within the Intelligence Community at the time.

After leaving the Army, I entered the civilian workforce to develop Point-of-Sale applications using C++. I would spend nearly two decades developing code for a variety of companies using various platforms and languages. I developed low-level code for phone systems, created custom Android operating systems, and programmed countless web and mobile applications.

Today

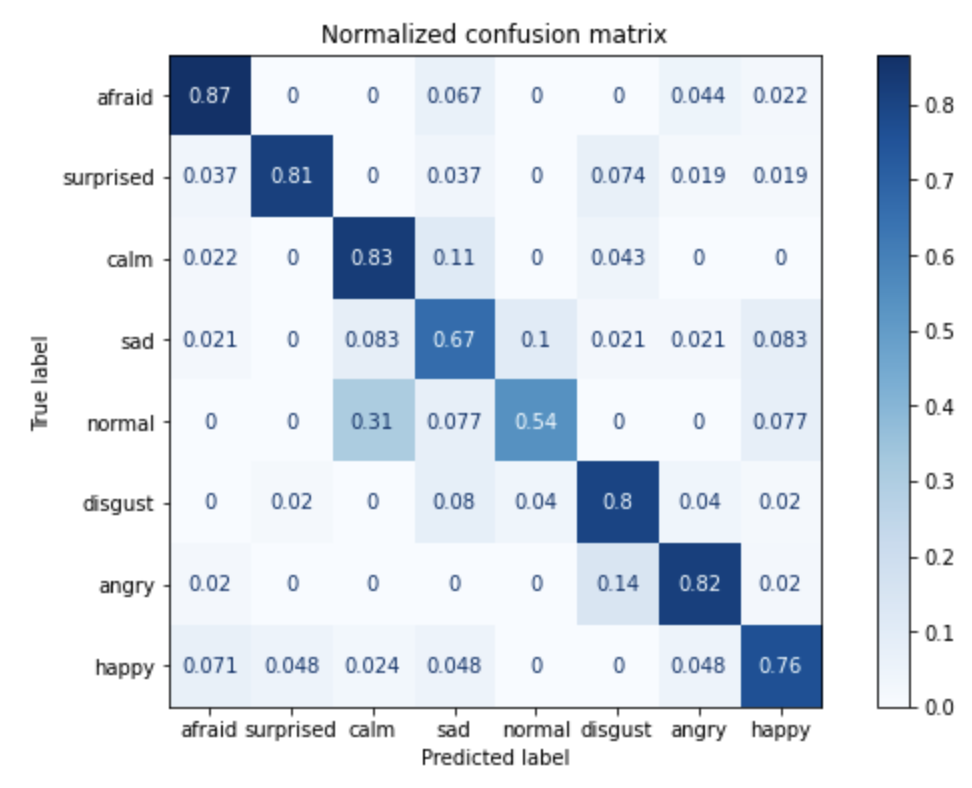

Now, I run my own business developing software for clients and creating artificial intelligence solutions. But when I look back, I am always amazed at how far the technology revolution has come, and I am thankful to have had a chance to be a part of it! With 20 years left before I retire, I can’t imagine where technology will take us next. Yet I also can’t imagine not being a part of that future!

Those who were born in the ’90s or in the new millennium will never know how much the world has changed. But my generation watched it happen, and many of us played a part in making it happen!